Overview

For a detailed introduction to the analysis framework, see this page. The framework provides classes and basic tools for general-purpose analyses. To create an analysis, you have to define some XML configuration files that drive the cut flow. The framework provides a tool called Jigsaw that loads a plugin (see below), which auto-configures and runs your analysis. Standalone analyses are still possible, but this approach is not recommended when you are starting out.

Hands on

The setup has been tested successfully on the Bologna Tier3 nodes:

ssh -X ${USER}@uibo-atlas-01.cr.cnaf.infn.it

bsub -q T3_BO -Is bash

In this case, it is strongly recommended to set up the GCC environment first:

. /afs/cern.ch/sw/lcg/external/gcc/4.3.2/x86_64-slc5-gcc43-opt/setup.sh

Change x86_64 according to your needs (i686, ia32).

I assume a directory tree like this:

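$HOME
$HOME/local
$HOME/local/bin
$HOME/local/lib
$HOME/local/share
$HOME/development/analysis1
$HOME/development/analysis2
$HOME/development/...

We will download the code in $HOME/development. The installation will put the headers, some tools and the libraries in the $HOME/local subdirectories. Note that the commands in the following sections use $HOME/src for the external packages and $HOME/analysis for the framework and the analyses; adapt them to your own layout.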
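If the tree does not exist yet, it can be created in one go. The sketch below is only a convenience: the helper name make_workspace_tree is hypothetical, and besides the directories listed above it also creates $HOME/src and $HOME/analysis, which the download commands in the following sections use.

```bash
# Create the directory layout assumed in this guide under a root directory
# (default: $HOME). make_workspace_tree is a hypothetical helper name.
make_workspace_tree() {
    local root="${1:-$HOME}"
    # Installation prefix, i.e. the --prefix / PREFIX used below
    mkdir -p "$root/local/bin" "$root/local/lib" "$root/local/share" || return 1
    # Source areas: development/ as in the tree above, plus src/ and
    # analysis/, where the later commands download the code
    mkdir -p "$root/development" "$root/src" "$root/analysis" || return 1
}

# Example:
#   make_workspace_tree            # creates everything under $HOME
```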

Remember to add the $HOME/local subdirectories to your PATH and LD_LIBRARY_PATH. With bash you can do it this way:

export PATH=$HOME/local/bin:$HOME/local/share:${PATH}

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+${LD_LIBRARY_PATH}:}$HOME/local/lib
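If these lines go into your ~/.bashrc, every new shell (or re-sourcing the file) adds the same directories again. Below is a minimal sketch of an idempotent alternative using a hypothetical helper, path_add. It also avoids creating an empty entry (a leading or trailing colon) when the variable is initially unset, because an empty entry makes the dynamic loader search the current directory.

```bash
# Add a directory to a colon-separated variable (PATH, LD_LIBRARY_PATH, ...)
# only if it is not listed yet. Third argument "front" prepends, default appends.
# path_add is a hypothetical helper name.
path_add() {
    local var="$1" dir="$2" where="${3:-back}" cur
    eval "cur=\${$var:-}"
    case ":$cur:" in
        *":$dir:"*) return 0 ;;        # already listed: leave the variable as it is
    esac
    if [ -z "$cur" ]; then
        cur="$dir"                     # no stray colon, i.e. no empty entry
    elif [ "$where" = front ]; then
        cur="$dir:$cur"
    else
        cur="$cur:$dir"
    fi
    eval "export $var=\"\$cur\""
}

# Same result as the two export lines above, but safe to run repeatedly
# (share is prepended first so that bin ends up in front of it):
path_add PATH "$HOME/local/share" front
path_add PATH "$HOME/local/bin" front
path_add LD_LIBRARY_PATH "$HOME/local/lib"
```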

Library Dependencies

The framework needs a number of libraries. Most of them are provided by ATHENA, so we strongly suggest setting up the ATHENA environment first (read the ATLAS Workbook if you don't know what we're talking about!). For example:

export AtlasSetup=/afs/cern.ch/atlas/software/dist/AtlasSetup
alias asetup='source $AtlasSetup/scripts/asetup.sh'
asetup 17.0.3

Non-ATHENA Installation

Download and install the following packages only if your environment does not already provide them. The external packages are built in $HOME/src:

First install FastJet (website):

mkdir -p $HOME/src
cd $HOME/src
wget http://www.lpthe.jussieu.fr/~salam/fastjet/repo/fastjet-2.4.4.tar.gz
tar -xzvf fastjet-2.4.4.tar.gz
cd fastjet-2.4.4
./configure --prefix=$HOME/local --enable-allplugins
make
make install

Now install BAT, the Bayesian Analysis Toolkit (website):

cd $HOME/src
wget http://www.mppmu.mpg.de/bat/source/BAT-0.4.3.tar.gz
tar -xzvf BAT-0.4.3.tar.gz
cd BAT-0.4.3
./configure --prefix=$HOME/local
make
make install
export BATINSTALLDIR=$HOME/local
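Both packages follow the same download, configure, make, make install pattern, so the steps can be wrapped in a small function. This is a sketch, not part of the framework. The function install_tarball and the SRC_DIR and DRY_RUN variables are hypothetical. It assumes that each tarball unpacks into a directory named after it, which holds for the two versions above judging from the cd commands. With DRY_RUN=1 it only prints the commands it would run.

```bash
# Download, unpack, configure, build and install an autotools tarball into a
# prefix, mirroring the FastJet and BAT steps above. install_tarball is a
# hypothetical helper; DRY_RUN=1 prints the commands instead of running them.
install_tarball() {
    [ $# -ge 2 ] || { echo "usage: install_tarball URL PREFIX [configure args...]" >&2; return 2; }
    local url="$1" prefix="$2"
    shift 2                                # remaining arguments go to ./configure
    local tarball="${url##*/}"             # e.g. fastjet-2.4.4.tar.gz
    local srcdir="${tarball%.tar.gz}"      # assumes it unpacks into fastjet-2.4.4/
    local src="${SRC_DIR:-$HOME/src}"      # build area, $HOME/src as in the text
    local run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run=echo; fi
    # Subshell: the cd calls do not change the caller's directory, and the
    # && chain stops at the first failing step.
    (
        $run mkdir -p "$src" &&
        $run cd "$src" &&
        $run wget "$url" &&
        $run tar -xzf "$tarball" &&
        $run cd "$srcdir" &&
        $run ./configure --prefix="$prefix" "$@" &&
        $run make &&
        $run make install
    )
}

# Examples (these download and build, so run them only if needed):
#   install_tarball http://www.lpthe.jussieu.fr/~salam/fastjet/repo/fastjet-2.4.4.tar.gz "$HOME/local" --enable-allplugins
#   install_tarball http://www.mppmu.mpg.de/bat/source/BAT-0.4.3.tar.gz "$HOME/local"
#   export BATINSTALLDIR=$HOME/local
```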

KLFitter (MANDATORY!)

cd $HOME/src
svn co svn+ssh://YourCERNUserID@svn.cern.ch/reps/atlasgrp/Institutes/Goettingen/KLFitter/tags/KLFitter-00-05-00 KLFitter # or newer
cd KLFitter

Interlude: you have to modify the KLFitter files library/Makefile and extras/Makefile. Add /include to the definition of BATCFLAGS this way:

BATCFLAGS = -I$(BATINSTALLDIR)/include

Bear in mind that this may change according to your environment. You might also want to change BATINSTALLDIR for the ATHENA environment, e.g.:

export BATINSTALLDIR=$ATLAS_EXTERNAL/BAT/0.3.2_root5.28.00a/i686-slc5-gcc43/

Now go on with the compilation:

make
make extras

Now make symbolic links to libKLFitter.so and libKLFitterExtras.so:

ln -s $HOME/src/KLFitter/library/libKLFitter.so $HOME/local/lib/.
ln -s $HOME/src/KLFitter/extras/libKLFitterExtras.so $HOME/local/lib/.

If you prefer, you can add these directories to $LD_LIBRARY_PATH instead:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HOME/src/KLFitter/library:$HOME/src/KLFitter/extras

In any case, set:

export KLFITTERROOT=$HOME/src/KLFitter
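The BATCFLAGS edit described above (to be done before running make) can be scripted. This is a minimal sketch. It assumes that each Makefile defines BATCFLAGS on a single line of the form "BATCFLAGS = ...", so check this in your KLFitter version. The name patch_batcflags is hypothetical, and sed keeps a .bak copy of each file.

```bash
# Rewrite the BATCFLAGS definition in the given Makefiles so that it points
# to $(BATINSTALLDIR)/include. patch_batcflags is a hypothetical helper name.
patch_batcflags() {
    local mk
    for mk in "$@"; do
        [ -f "$mk" ] || { echo "missing: $mk" >&2; return 1; }
        # Replace the whole "BATCFLAGS = ..." line; the single quotes keep
        # $(BATINSTALLDIR) literal, so make expands it, not the shell.
        sed -i.bak 's|^[[:space:]]*BATCFLAGS[[:space:]]*=.*$|BATCFLAGS = -I$(BATINSTALLDIR)/include|' "$mk" || return 1
    done
}

# Example, from the KLFitter directory:
#   patch_batcflags library/Makefile extras/Makefile
```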

Installing the Jigsaw Analysis Framework

You may need to adjust Makefile.inc according to your environment (e.g. check path variables such as PREFIX or FASTJET).

Now you can download and compile our framework:

cd $HOME/analysis
svn co svn+ssh://YourCERNUserID@svn.cern.ch/reps/atlasgrp/Institutes/Bologna/AnalysisFramework/trunk Jigsaw
cd Jigsaw

No configure script is provided (yet). You have to export KLFITTERROOT and set the variable PREFIX according to your environment when issuing make (you can also set it permanently in Makefile.inc). In our case we keep the default:

export KLFITTERROOT=$HOME/src/KLFitter

Now you can compile the framework:

make -j<NumberOfCores, e.g. 16> PREFIX=$HOME/local

export ANALYSISFRAMEWORKROOT=${HOME}/local #or your modified PREFIX
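Instead of typing the number of cores by hand, you can query it. In this sketch, nproc (GNU coreutils) is tried first and getconf _NPROCESSORS_ONLN is used as a fallback. Both are assumptions about your system, and ncores is a hypothetical name.

```bash
# Print the number of online CPU cores, falling back to 1 if neither
# nproc nor getconf can tell. ncores is a hypothetical helper name.
ncores() {
    nproc 2>/dev/null ||
        getconf _NPROCESSORS_ONLN 2>/dev/null ||
        echo 1
}

# Example, inside the Jigsaw directory:
#   make -j"$(ncores)" PREFIX="$HOME/local"
```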

The installation process installs a library, some tools and the headers into the $HOME/local subdirectories. Make sure that they are there! The library directory ($HOME/local/lib) must be listed in $LD_LIBRARY_PATH, otherwise the system will not find the analysis framework.
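Both checks can be done in one go. Below is a minimal sketch using a hypothetical function, check_install. It only looks for the bin and lib subdirectories of the prefix, because the exact placement of headers and tools depends on Makefile.inc. It also verifies that the lib directory is listed in LD_LIBRARY_PATH.

```bash
# Check an installation prefix (default $HOME/local): bin/ and lib/ must
# exist and lib/ must be listed in LD_LIBRARY_PATH. Returns 1 on any problem.
# check_install is a hypothetical helper name.
check_install() {
    local prefix="${1:-$HOME/local}" d status=0
    for d in bin lib; do
        if [ -d "$prefix/$d" ]; then
            echo "ok:      $prefix/$d"
        else
            echo "missing: $prefix/$d"; status=1
        fi
    done
    # Surround with colons so that the first and last entries match as well
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$prefix/lib:"*) echo "ok:      $prefix/lib is in LD_LIBRARY_PATH" ;;
        *) echo "missing: $prefix/lib is not in LD_LIBRARY_PATH"; status=1 ;;
    esac
    return "$status"
}
```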

TutorialAnalysis

We provide a skeleton for your analyses:

cd $HOME/analysis
svn co svn+ssh://YourCERNUserID@svn.cern.ch/reps/atlasgrp/Institutes/Bologna/TutorialAnalysis/trunk TutorialAnalysis
cd TutorialAnalysis
make

You can modify makeLinks2Datasets.sh or just create a directory containing symbolic links to your datasets. In our example, try to run:

Jigsaw -a Tutorial -n 1000 -d /gpfs_data/storm/atlas/localgroupdisk/user/rsoualah/mc10_7TeV/user.rsoualah.mc10_7TeV.105860.TTbar_PowHeg_Jimmy.merge.NTUP_TOP.e600_s933_s946_r2302_r2300_p572.28July_v5_0.110801015827/ -w Rachik

The -d option specifies the directory containing the input ROOT files, while the -f option specifies a text file containing a list of data files to be processed (useful when running in parallel on a cluster). The -p option specifies the configuration file; the default one is share/Analysis.xml. Some other examples are provided for your convenience.
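For the -f option, a file list can be built from a dataset directory and split into chunks, one per parallel job. This sketch assumes that the list format is one file path per line and that input files are named *.root or *.root.N. Please check both assumptions against the examples shipped with the framework. The name make_filelists is hypothetical.

```bash
# Collect the ROOT files below a dataset directory into a list (one path per
# line, sorted) and split it into chunks of N files each for parallel jobs:
# <list>.part.aa, <list>.part.ab, ... make_filelists is a hypothetical name.
make_filelists() {
    local dir="$1" list="$2" per_job="${3:-50}"
    # -L follows symbolic links, e.g. a directory of links to your datasets;
    # grid files are often named *.root.1, *.root.2, ...
    find -L "$dir" -type f \( -name '*.root' -o -name '*.root.[0-9]*' \) |
        sort > "$list" || return 1
    rm -f "$list".part.*                   # drop chunks left by a previous run
    split -l "$per_job" "$list" "$list.part."
}

# Example:
#   make_filelists /path/to/dataset files.txt 50
#   Jigsaw -a Tutorial -f files.txt.part.aa
```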

If everything goes smoothly, you should see something like this:

File contains 9992 events
Initialize tree for file no. 0
100 / 1000(10%)
200 / 1000(20%)
300 / 1000(30%)
400 / 1000(40%)
500 / 1000(50%)
600 / 1000(60%)
700 / 1000(70%)
800 / 1000(80%)
900 / 1000(90%)
Finalizing analysis...

Final statistics for selection ELE:
All events ( 0) 1000 ( 1000)
Dummy Cut ( 1) 1000 (prev: 1.00) (eff: 1.00) (unw: 1000)
EF_e15_medium ( 2) 329 (prev: 0.33) (eff: 0.33) (unw: 329)
# pvx (4 trk) >= 1 ( 3) 329 (prev: 1.00) (eff: 0.33) (unw: 329)
SL_el ( 4) 186 (prev: 0.57) (eff: 0.19) (unw: 186)
MET > 35 GeV ( 5) 135 (prev: 0.73) (eff: 0.14) (unw: 135)
W_mT > 25 GeV ( 6) 119 (prev: 0.88) (eff: 0.12) (unw: 119)
4j25 ( 7) 71 (prev: 0.60) (eff: 0.07) (unw: 71)
1 bTag > 5.85 ( 8) 50 (prev: 0.70) (eff: 0.05) (unw: 50)
Jet cleaning ( 9) 50 (prev: 1.00) (eff: 0.05) (unw: 50)
Cut flow ELE dumped to histogram ELE_cutflow

Final statistics for selection MUON:
All events ( 0) 1000 ( 1000)
Dummy Cut ( 1) 1000 (prev: 1.00) (eff: 1.00) (unw: 1000)
EF_mu10_MSonly EF_mu13 EF_mu13_tight ( 2) 350 (prev: 0.35) (eff: 0.35) (unw: 350)
# pvx (4 trk) >= 1 ( 3) 350 (prev: 1.00) (eff: 0.35) (unw: 350)
SL_mu ( 4) 208 (prev: 0.59) (eff: 0.21) (unw: 208)
MET > 20 GeV ( 5) 197 (prev: 0.95) (eff: 0.20) (unw: 197)
MET+W_mT > 60 GeV ( 6) 184 (prev: 0.93) (eff: 0.18) (unw: 184)
4j25 ( 7) 114 (prev: 0.62) (eff: 0.11) (unw: 114)
1 bTag > 5.85 ( 8) 86 (prev: 0.75) (eff: 0.09) (unw: 86)
Jet cleaning ( 9) 86 (prev: 1.00) (eff: 0.09) (unw: 86)
Cut flow MUON dumped to histogram MUON_cutflow
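In the printout, the prev column is the ratio to the previous cut and eff is the ratio to All events. For ELE, for example, the b-tag cut keeps 50 of 71 events (50/71 ≈ 0.70) and 50 of 1000 overall (0.05). The awk sketch below recomputes both columns from a saved log, which can be handy when comparing two runs. It assumes the line layout shown above (cut name, "( index)", count). The name cutflow_eff is hypothetical.

```bash
# Recompute the relative ("prev") and cumulative ("eff") efficiencies from a
# saved Jigsaw log (file arguments or stdin). Assumes cut lines of the form
#   <cut name> ( <index>) <count> ...
# and one "Final statistics for selection <KEY>:" header per selection.
# Output columns (tab-separated): selection, cut, count, prev, eff.
cutflow_eff() {
    awk '
    /^Final statistics for selection/ {
        sel = $NF; sub(/:$/, "", sel)      # e.g. "ELE:" -> "ELE"
        started = 0; last = 0              # restart the cutflow
        next
    }
    match($0, /\( *[0-9]+\) +[0-9.]+/) {
        name = substr($0, 1, RSTART - 1)
        sub(/ +$/, "", name)               # drop blanks before "( index)"
        s = substr($0, RSTART, RLENGTH)
        sub(/^\( *[0-9]+\) +/, "", s)      # keep only the event count
        n = s + 0
        if (!started) { first = n; started = 1 }   # first cut = "All events"
        prev = (last > 0) ? n / last : 1
        eff  = (first > 0) ? n / first : 0
        printf "%s\t%s\t%g\t%.2f\t%.2f\n", sel, name, n, prev, eff
        last = n
    }' "$@"
}

# Example:
#   Jigsaw -a Tutorial -n 1000 -d <dataset dir> -w Rachik | tee run.log
#   cutflow_eff run.log
```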

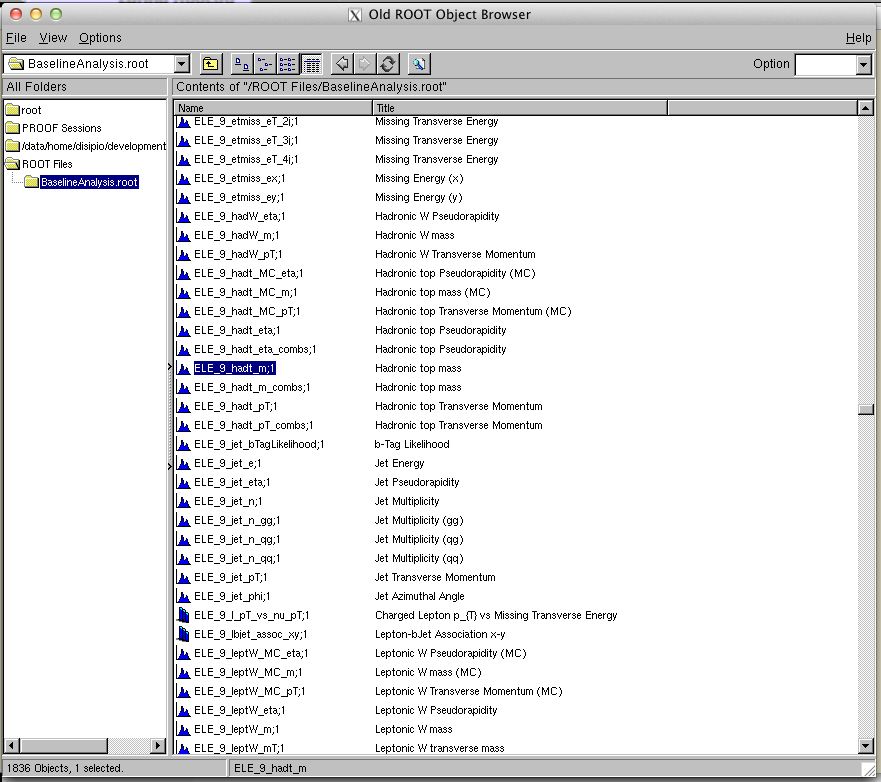

By default, histograms are saved in a file called BaselineAnalysis.root. You can change its name with the -o <OutputFileName.root> option.

And Now?

Now that we have made sure that Jigsaw runs on your system, you are free to create your own analysis. There are three ways to do this.

Modify TutorialAnalysis

You can tweak TutorialAnalysis according to your needs, adding new event manipulators and histogram fillers. This analysis should work out of the box. Our makefiles should recognize and compile your new classes automatically, provided their names contain EventManipulator or HistogramFiller. Two not-too-basic examples are provided.

cd $HOME/analysis
svn co svn+ssh://YourCERNUserID@svn.cern.ch/reps/atlasgrp/Institutes/Bologna/TutorialAnalysis/trunk MyAnalysis
cd MyAnalysis
make
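To see which of your source files follow the naming convention mentioned above, you can list them. This sketch assumes the sources are .cpp files, as in the stub analysis. The name list_plugins is hypothetical.

```bash
# List the C++ sources whose names contain EventManipulator or
# HistogramFiller below a directory (default: current directory).
# list_plugins is a hypothetical helper name.
list_plugins() {
    find "${1:-.}" -type f \
        \( -name '*EventManipulator*.cpp' -o -name '*HistogramFiller*.cpp' \) | sort
}

# Example, inside MyAnalysis:
#   list_plugins
```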

Create a Stub Analysis

Just run Jigsaw's analysis-creation script (in this example the analysis is called TTbar):

cd $HOME/analysis
jigsaw-createCutBasedAnalysis.py TTbar
cd TTbarAnalysis
make

The script creates the following files in TTbarAnalysis:

TTbarAnalyzer.h
TTbarAnalyzer.cpp
Makefile.inc
Makefile
cardfiles/Analysis.xml
share/histograms.xml

As usual, this stub analysis should run out of the box. We will add some scripts for the creation of event manipulators and histogram fillers as soon as possible. Stay tuned!
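To make sure the stub was generated completely before building it, you can check for the files listed above. This is a minimal sketch to be run from the directory that contains TTbarAnalysis. The name check_stub is hypothetical.

```bash
# Check that jigsaw-createCutBasedAnalysis.py produced the expected files
# for analysis <name> in <name>Analysis/. check_stub is a hypothetical name.
check_stub() {
    local name="$1" dir="${1}Analysis" f missing=0
    for f in "${name}Analyzer.h" "${name}Analyzer.cpp" Makefile.inc Makefile \
             cardfiles/Analysis.xml share/histograms.xml; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example:
#   check_stub TTbar && (cd TTbarAnalysis && make)
```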

Run Default CutBasedAnalysis

If you don't need to perform special operations such as event-by-event dumps, there is no need at all to code an analysis library. This part is still in development and not all features are enabled yet. Anyway, if your analysis is based on default event manipulators and histogram fillers, you just need to provide the XML configuration files (usually cardfiles/Analysis.xml and share/histograms.xml). Just run:

#> Jigsaw --params-xml cardfiles/Analysis.xml -nevtmax 100
[14:48:56] INFO: Config: Running on 100 events
[14:48:56] INFO: Jigsaw: Found WrapperNtuplePlugin: libSTDHEPWrapperNtuplePlugin.so / STDHEPWrapperNtuplePlugin
[14:48:56] INFO: Found custom ntuple wrapper STDHEPWrapperNtuplePlugin
[14:48:56] INFO: Jigsaw: Found Analyzer: libBlackHoleRemnantsAnalyzer.so / BlackHoleRemnantsAnalyzer
[14:48:56] INFO: Plugin loaded... Initializing BlackHoleRemnantsAnalyzer
...

How to Deal with Runtime Options

The most important configuration file is the one defining the runtime behaviour of your analysis. The default name is share/Analysis.xml (take a look). The basic syntax is:

<analysis name="MyAnalysis">
  <histograms>
    <schedule hfiller="FillEventWeight" />
    <schedule hfiller="FillFinalStateObjects" />
  </histograms>
  <cutflow key="ELE">
    <cut type="trigger" L1="" L2="" EF="EF_e20_medium" />
    <cut type="leptoncut" ptmin="25." etamax="2.5" n="1" flavor="ele" />
    <cut type="jetcut" n="2" ptmin="25" />
  </cutflow>
</analysis>

In many cases, you will run on ROOT ntuples. To speed up execution, you can specify in a separate cardfile (e.g. share/MyWrapper.xml) the branches you want to activate for a given wrapper. You can also assign more than one configuration to the same wrapper, since the same wrapper may serve real data, data/MC comparisons or a dedicated truth Monte Carlo study. Such a config file looks like this:

<ntuple wrapper="MyWrapper" >

<branches>

<activate branch="el_pt" />

<activate branch="el_eta" />

<activate branch="el_phi" />

<activate branch="el_e" />

</branches>

</ntuple>
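Long branch lists are tedious to type by hand. Below is a minimal sketch that generates a cardfile in the format above from a plain list of branch names, one per line. The names make_wrapper_xml and branches.txt are hypothetical.

```bash
# Generate an ntuple-wrapper cardfile like the one above.
# $1 = wrapper name; branch names are read from stdin, one per line.
# Blank lines and lines starting with '#' are skipped.
# make_wrapper_xml is a hypothetical helper name.
make_wrapper_xml() {
    local wrapper="$1" branch
    printf '<ntuple wrapper="%s" >\n' "$wrapper"
    printf '  <branches>\n'
    # "|| [ -n ... ]" also handles a last line without a trailing newline
    while read -r branch || [ -n "$branch" ]; do
        case "$branch" in
            ''|'#'*) continue ;;
        esac
        printf '    <activate branch="%s" />\n' "$branch"
    done
    printf '  </branches>\n'
    printf '</ntuple>\n'
}

# Example:
#   make_wrapper_xml MyWrapper < branches.txt > share/MyWrapper.xml
```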

FAQ

Jigsaw has too many options!

Jigsaw --help

Or, if you sourced jigsaw-setenv.sh or jigsaw-autocompletion.sh, just press TAB while typing the command.

I can't remember all those keys for default cuts, event manipulators, histogram fillers and ntuple wrappers. How do I find out?

jigsaw-config [--installdir] [--cuts|-c] [--event-manipulators|-em] [--ntuple-wrappers|-nw] [--histogram-fillers|-hf]

Registered cuts: 25
* btag
* dummy
* emuoverlap
* etmiss
* hfoverlap
* jetcleaning
* jetcut
* larError
* leptoncut
* leptonveto
* mTW
* noncollisionbkg
* optjetcut
* pvx
* recoparticlecut
* recoquantitycut
* sl
* svx
* topchess
* toptriangular
* toptrigger
* toptruth
* trigger
* triggermatch
* zmass

What if I want to run Jigsaw on the grid with Panda/prun?

Try our submission package AnalysisOnGrid. Customize user.config according to your local setup. This version is still highly experimental and we can only give very limited support. You asked for it!

svn co svn+ssh://YourCERNUserID@svn.cern.ch/reps/atlasgrp/Institutes/Bologna/AnalysisOnGrid/trunk AnalysisOnGrid

The configuration file looks like this:

# define configurable options
framework = Jigsaw
grid_user = RiccardoDiSipio
sw_dir = /home/disipio/development
framework_src = /home/disipio/development/Jigsaw
install_dir = /home/disipio/local
grl = share/Top_GRL_K.xml
params = cardfiles/MCChallenge_2011_EPS.xml
wrapper = Rachik
klfitter = /home/disipio/KLFitter
compile = local # remote | local | afs

You are very likely to modify the options grid_user, sw_dir, framework_src, install_dir and klfitter (perhaps wrapper, too). We suggest leaving the compile option untouched if you are running on a Linux machine.
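A quick check that the directory-valued options actually exist on your machine can save a failed submission. This sketch assumes the "key = value  # comment" format shown above. The names config_get and check_user_config are hypothetical.

```bash
# Print the value of a key from a "key = value  # comment" file
# (text after '#' and trailing blanks are dropped; the last match wins).
config_get() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*\([^#]*\).*/\1/p" "$1" |
        sed 's/[[:space:]]*$//' | tail -n 1
}

# Check that the directory options in user.config exist on this machine.
# config_get and check_user_config are hypothetical helper names.
check_user_config() {
    local cfg="${1:-user.config}" key val status=0
    for key in sw_dir framework_src install_dir klfitter; do
        val=$(config_get "$cfg" "$key")
        if [ -z "$val" ]; then
            echo "$key: not set" >&2; status=1
        elif [ ! -d "$val" ]; then
            echo "$key: directory $val not found" >&2; status=1
        fi
    done
    return "$status"
}

# Example, inside AnalysisOnGrid:
#   check_user_config user.config && echo "paths look fine"
```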

To run:

./submit_analysis -a AnalysisName -r RunFile.list

During the submission, a file called logs/submission_YYYYMMDDHHMM.log is created. It can be used for bookkeeping.
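Because the timestamp in the log name is zero-padded (YYYYMMDDHHMM), plain string sorting is also chronological, so the most recent log is simply the last one in sorted order. Here is a short sketch; the name latest_submission_log is hypothetical.

```bash
# Print the newest logs/submission_YYYYMMDDHHMM.log below a directory
# (default: current directory); prints nothing if there is none.
# latest_submission_log is a hypothetical helper name.
latest_submission_log() {
    ls "${1:-.}"/logs/submission_*.log 2>/dev/null | sort | tail -n 1
}

# Example, inside AnalysisOnGrid:
#   less "$(latest_submission_log)"
```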

-- RiccardoDiSipio - 22-Sep-2011

Topic revision: r14 - 2011-12-09 - RiccardoDiSipio
